Claude wrote me a TUI and all I got was this stupid TUI

Writing is hard. I usually overthink it.

Late one night, after mindlessly feeding the highlights of a day hike into a Slack thread, I had a punch-drunk inspiration: writing under similar constraints and conveniences, could I barf out a blog post almost as easily?

Rather than compose my next article in Slack DMs with myself, I went for the second-most-practical proof of principle: creating a new tiny writing app that emulates the microblogging experience.

I already had a gaggle of unfinished projects. But I was feeling good about Claude Code, and easily sniped by what looked like a quick vibe-coding side quest. So off I went!

Initial spec

I had a pretty clear set of basic requirements, as a user:

- A basic editor for composing short entries. Entries should drop into a “thread” that I can spit out into a single Markdown file when I’m ready to call it a draft.

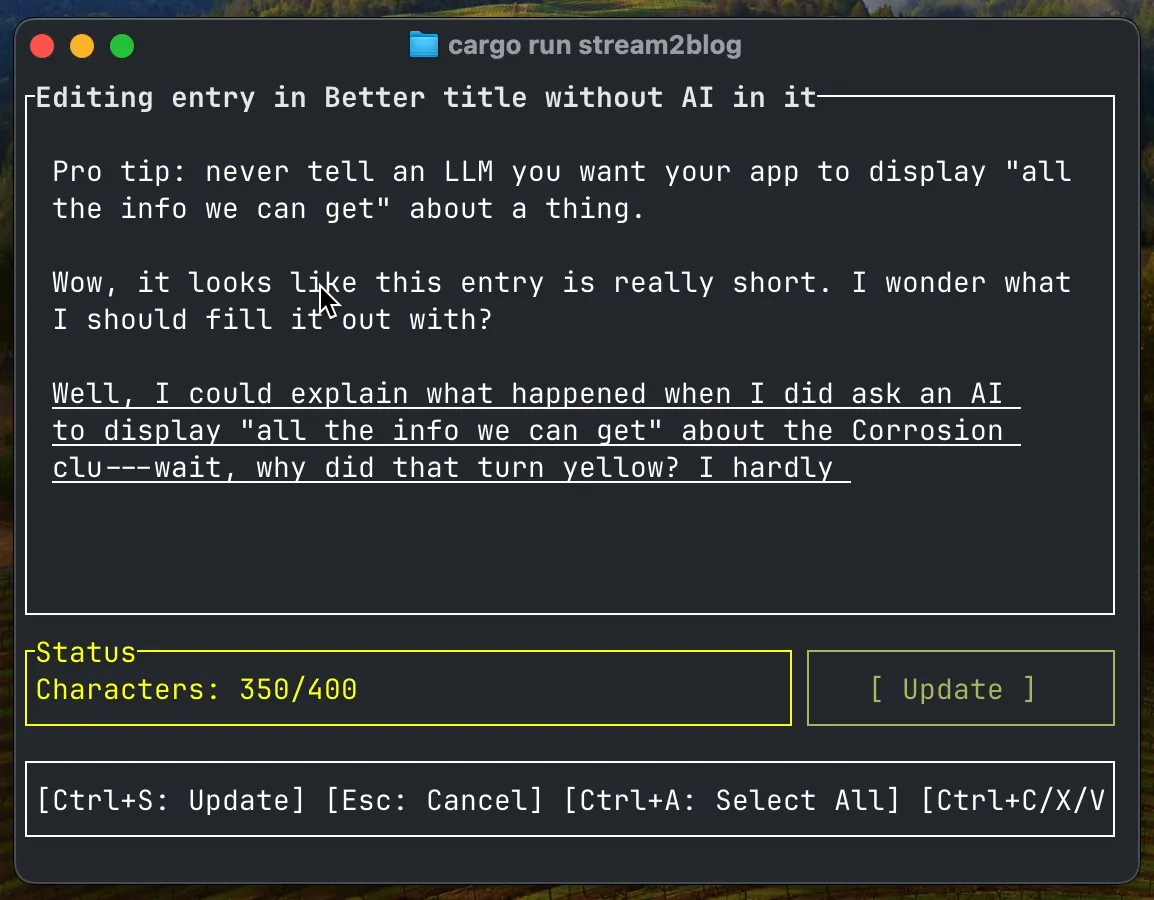

- A hard character limit per entry, complete with colour-changing character counter to ratchet up anxiety in inverse proportion to conciseness.

- Some stupid-simple way to add a single image to an entry. Ideally: paste from the OS clipboard. With LLM help, it’ll be easy to add resizing and conversion to WebP, or whatever makes it easier to get from train-of-thought to published on my blog.
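Here is a minimal sketch of that spec's data model. It assumes a 280-character limit (the post doesn't give the real number) and reduces the counter colours to three hypothetical levels. `Entry`, `Thread`, `counter_level`, and `to_markdown` are illustrative names, not the app's actual code, and drawing the colours with Ratatui is left out. Counting `chars()` rather than bytes keeps accented letters from eating two slots each. A grapheme-aware count would need an extra crate such as unicode-segmentation.

```rust
/// Hypothetical hard limit per entry; the post doesn't give the real number.
const MAX_CHARS: usize = 280;

/// Three colour bands for the counter, in rising order of anxiety.
#[derive(Debug, PartialEq, Eq, Clone, Copy)]
enum CounterLevel {
    Calm,    // plenty of room left
    Warning, // 80% or more of the limit used
    Over,    // past the limit: the entry can't be committed
}

/// One entry in a thread: short text plus at most one image.
struct Entry {
    text: String,
    image: Option<String>, // hypothetical path to the entry's image file
}

/// A thread is an ordered list of entries that becomes one Markdown draft.
struct Thread {
    entries: Vec<Entry>,
}

/// Count Unicode scalar values, not bytes, so "é" uses one slot rather than two.
fn char_count(text: &str) -> usize {
    text.chars().count()
}

/// Pick the counter colour band for the text currently in the editor.
fn counter_level(text: &str) -> CounterLevel {
    let n = char_count(text);
    if n > MAX_CHARS {
        CounterLevel::Over
    } else if n * 5 >= MAX_CHARS * 4 {
        // Integer form of n >= 0.8 * MAX_CHARS.
        CounterLevel::Warning
    } else {
        CounterLevel::Calm
    }
}

impl Thread {
    /// Enforce the hard limit when an entry is committed to the thread.
    fn push(&mut self, text: &str, image: Option<&str>) -> Result<(), String> {
        let n = char_count(text);
        if n > MAX_CHARS {
            return Err(format!("entry has {} chars; the limit is {}", n, MAX_CHARS));
        }
        self.entries.push(Entry {
            text: text.to_string(),
            image: image.map(str::to_string),
        });
        Ok(())
    }

    /// Flatten the thread into one Markdown draft: each entry becomes a
    /// paragraph, followed by its image link if it has one.
    fn to_markdown(&self) -> String {
        let mut out = String::new();
        for entry in &self.entries {
            out.push_str(entry.text.trim());
            out.push_str("\n\n");
            if let Some(path) = &entry.image {
                out.push_str(&format!("![]({})\n\n", path));
            }
        }
        out
    }
}
```

In this sketch, committing an over-limit entry returns an `Err` instead of silently truncating, which is one reading of "hard limit". The post doesn't say what the real app does in that case.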

Scheming

For a break from the browser, and to satisfy my craving for novelty, I decided on a text-based user interface (TUI).

A quick web search turned up strong TUI libraries for Go, Python, and Rust (Bubble Tea, Textual, and Ratatui, respectively).

The novelty factor tipped the balance to Rust. Bonus: that means this can be a Rust client and Elixir/Phoenix storage API backed by SQLite, a Bizarro twin for the Phoenix-app-with-state-in-a-Rust-program that I bumped to the back burner to work on this.
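One way to picture that split, as a hedged sketch: the TUI talks to a storage trait, and the Elixir/Phoenix service sits behind one implementation of it. The trait, its method names, and the in-memory stand-in below are hypothetical. The post doesn't describe the real API, so the HTTP-backed implementation is deliberately not shown.

```rust
use std::collections::HashMap;

/// Hypothetical boundary between the TUI and wherever threads are stored.
/// A real implementation would make HTTP calls to the Phoenix app (not shown).
trait ThreadStore {
    /// Append an entry to a thread and return an id for it.
    fn save_entry(&mut self, thread_id: u64, text: &str) -> Result<u64, String>;
    /// Fetch a thread's entries in order.
    fn load_thread(&self, thread_id: u64) -> Result<Vec<String>, String>;
}

/// In-memory stand-in, handy for tests or for working without the backend.
#[derive(Default)]
struct MemoryStore {
    threads: HashMap<u64, Vec<String>>,
}

impl ThreadStore for MemoryStore {
    fn save_entry(&mut self, thread_id: u64, text: &str) -> Result<u64, String> {
        let entries = self.threads.entry(thread_id).or_default();
        entries.push(text.to_string());
        // The entry's position stands in for a database-assigned id.
        Ok((entries.len() - 1) as u64)
    }

    fn load_thread(&self, thread_id: u64) -> Result<Vec<String>, String> {
        self.threads
            .get(&thread_id)
            .cloned()
            .ok_or_else(|| format!("no thread with id {}", thread_id))
    }
}

/// TUI-side code depends only on the trait, so swapping the backend
/// (in-memory or HTTP) doesn't touch it.
fn commit_entries<S: ThreadStore>(
    store: &mut S,
    thread_id: u64,
    texts: &[&str],
) -> Result<usize, String> {
    for text in texts {
        store.save_entry(thread_id, text)?;
    }
    Ok(store.load_thread(thread_id)?.len())
}
```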

For the record: Claude assured me that this was a brilliant design.

Fast-forward

I started by working out, with Claude, design docs for the TUI and the Elixir storage app.

From there I tried to let Claude generate the code, with appropriate interactive planning and feedback. Vibing was particularly easy at first when there was nothing but a green field, Claude Code, and a language I couldn’t so much as read a function signature in.

I don’t have anything new to say about the process. Any specific observations will be stale by…well, by now, probably. So let’s skip forward.

So, it worked

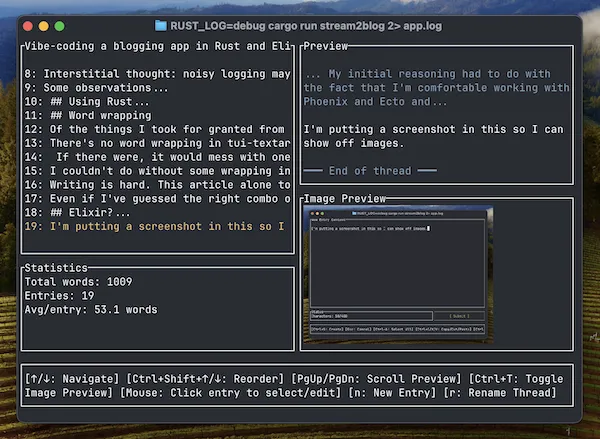

A few days later, there was a working (if buggy) blogging application that compromises very little on my original spec. It’s nice to use!

Ratatui, tui-textarea, Ratatui-image, Arboard, and Textwrap do most of the lifting.

The main twist was that tui-textarea doesn’t do word wrap, and it was easier to fork it to use the Textwrap library than to do text wrapping on top of it. I’ll put up some notes on that part later.
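The post doesn't show the fork. What follows is a standard-library-only sketch of the problem any such fork has to solve: wrapping a logical line into visual rows while remembering where each row starts, so that a cursor position in the text can be mapped to a row and column on screen. `VisualRow`, `wrap_line`, and `cursor_to_visual` are hypothetical names. This greedy wrapper is a simplified stand-in for Textwrap's `wrap` function, not its API. It also counts `char`s rather than display width, which Textwrap takes into account for wide characters.

```rust
/// One on-screen row produced by wrapping a single logical line.
#[derive(Debug, PartialEq)]
struct VisualRow {
    start: usize, // char index in the logical line where this row begins
    text: String,
}

/// Greedy word wrap: break at the last space that fits, dropping that space;
/// a word longer than `width` is split mid-word.
fn wrap_line(line: &str, width: usize) -> Vec<VisualRow> {
    assert!(width > 0, "width must be positive");
    let chars: Vec<char> = line.chars().collect();
    let mut rows = Vec::new();
    let mut start = 0;
    while chars.len() - start > width {
        // The char at `start + width` is the first one that doesn't fit; if it's
        // a space, breaking there still gives a row of exactly `width` chars.
        let limit = start + width;
        let space = (start..=limit).rev().find(|&i| chars[i] == ' ');
        let (end, next) = match space {
            Some(i) if i > start => (i, i + 1), // break at the space and skip it
            _ => (limit, limit),                // no usable space: hard split
        };
        rows.push(VisualRow { start, text: chars[start..end].iter().collect() });
        start = next;
    }
    rows.push(VisualRow { start, text: chars[start..].iter().collect() });
    rows
}

/// Map a cursor at char index `col` of the logical line to (row, column) on
/// screen. `rows` must come from `wrap_line`, which always returns at least one.
/// A cursor on a dropped break space lands one past the end of the previous row,
/// which can equal `width`; a real editor needs a policy for that column.
fn cursor_to_visual(rows: &[VisualRow], col: usize) -> (usize, usize) {
    // The cursor belongs to the last row that starts at or before it.
    let row = rows.iter().rposition(|r| r.start <= col).unwrap_or(0);
    (row, col - rows[row].start)
}
```

Keeping `start` on each row is the design choice that matters here. The text buffer stays unwrapped, and wrapping only affects how rows and the cursor are drawn.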

After the vibes fade away

Was “vibe coding” a smart way to go about this?

(Trick question.)

Smart? No. The land of Rust and Ratatui has a really good map! Ratatui and friends are well documented, with abundant examples to crib from. But I’m a tourist, and Claude can’t think about the whole map at once.

If I wanted to do this effectively, and I really wanted to use Rust, I should have used the map myself, and let LLMs accelerate my learning.

But what did I really want?

I wanted the app. I wanted to touch Rust (but not too much).

And…I wanted to see what Claude could do. I wanted to test the machine. Which was particularly easy when there was nothing but a green field, Claude Code, and a language I couldn’t so much as read a function signature in.

I really did get the best one could hope for:

- A usable app, without understanding everything that was going into it

- A candy-coated dose of Rust

- An imaginary trophy for me and Claude and all the libraries I used. We did it, yay.

Here’s a side effect: now there’s this neat program full of bugs and antipatterns that I have only the merest inkling about, and I couldn’t in good conscience suggest anyone use it or fork it.

Worse, I forked one of my dependencies to overcome the longest-standing GitHub issue on that project, and haven’t got anything good to offer back upstream. Who knows what nonsense is in there, and which other features I broke? Not me.

What about the Elixir backing app?

Looks like I vibed that one for real. Claude is so comfy with Phoenix and Ecto that I’ve hardly had to look at the storage app. No human brain cell has been bothered about a SQLite schema here.

But can you barf out a blog post now?

Of course not. Writing is hard. This article alone took days of vibe coding.

Are my constraints and conveniences good for anything? So far, I’m encouraged. I used the new app to bootstrap this post. It helps me keep track of the shape of a thread without showing it all to me at once. I can start new entries to add words, but the per-entry character limit actively reminds me to encapsulate my point.

It can still be tempting to organise a single thread into sections and move entries between them, which is a danger sign.

The convenience of pasting images is gratifying, but I haven’t really had occasion to put it through its paces, and there’s still a bug or two around removing them. I still think it’ll be cool.

If I use this to write another post after this one, I’ll say I was on to something.

Appendix: A smattering of Summer 2025 souvenirs (coding-with-LLMs-wise)

I’d now trust Claude.ai or Claude Code to generate a one-off API client in a language I can read (thus test and debug), without the buffer of getting it to write a program to do the conversion.

What I wasn’t appreciating in March of this year is that these interfaces are not simple wrappers for an LLM—while I’m not sure if Claude.ai was unequivocally agentic at that point, it is now.

Miscellany:

- Lazy cheat code: ask “why.” When debugging, Claude sometimes gets fixated on the first possibility it sees from wherever its attention is, and goes ahead with useless changes. When Claude seems to be having a bad day, asking it to explain why the program is behaving like that, before asking it to solve anything, can help keep it out of that rut. I haven’t added this to my CLAUDE.md yet, but I probably should.

- Alternate lazy cheat for bad days: ask “how does the module handle `$WHATEVER`” when you know it’s doing `$WHATEVER` wrong, and sometimes it’ll figure it out without you having to complain about symptoms.

- Claude likes to add diagnostic logs. I’m glad it doesn’t spontaneously remove logging, I guess, but I wonder if noisy logging makes a material difference to token usage or can pollute the context meaningfully. So I try to keep those from getting too wild. Similar for compilation warnings, which I otherwise have a tendency to let build up.